CircleCI just published “The New AI-Driven SDLC” and it crystallizes something I’ve been wrestling with for weeks: AI generates work at machine speed, but our validation systems still run at human speed.

Their key insight: “Infrastructure designed for predictable human batches cannot validate the constant stream of AI-generated code that may be syntactically correct but contextually inappropriate.”

I experienced this viscerally two days ago. I spent hours with my tech agents building a complex feature. The next day, I asked an agent to “clean up the feature branch” and it decided to skip commits and cherry-pick changes. It broke everything. It took hours and millions of tokens to untangle.

CircleCI identifies this as the fundamental problem: a capacity mismatch where code generation accelerates dramatically while review and validation remain slow. But here’s what they propose that’s interesting - it’s not about slowing down AI.

It’s about building AI agent feedback loops that connect what happens in production back to the development tools.

That’s exactly what I did after the breaking incident. My immediate next step wasn’t to blame the agent or abandon AI. It was to analyze my context markdown and agent configurations and work out what needed to change.

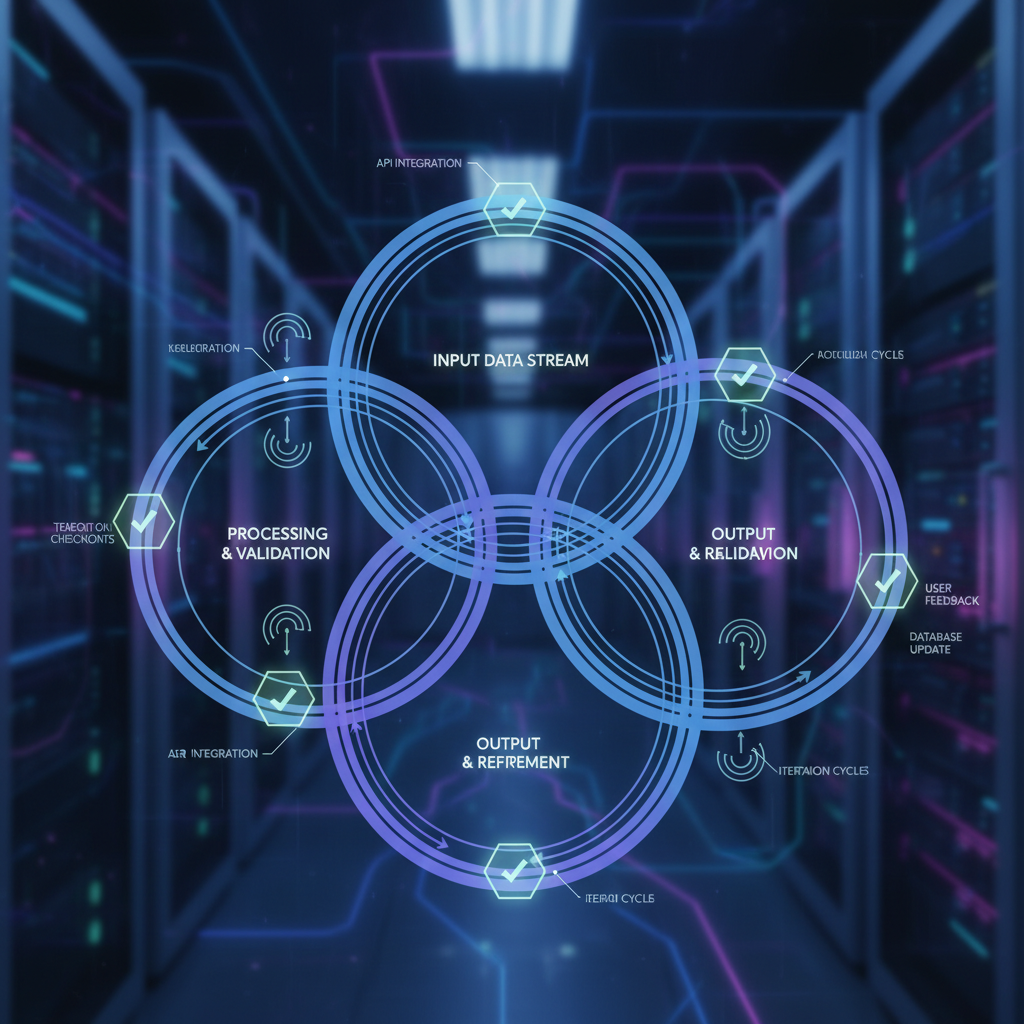

Let me show you what those feedback loops actually look like in practice.

The AI Agent Feedback Loop Architecture

CircleCI talks about this at the infrastructure level - connecting pipeline data back to AI development tools. I’m doing the same thing, but for AI agent orchestration:

1. CLAUDE.md as the Source of Truth

My CLAUDE.md file contains the strategic context, operating principles, and current priorities. Every agent reads this. When an agent breaks something, I update this context with the lesson learned.

This is context-as-code. Version controlled, explicit, and fed into every agent invocation.
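Here is a minimal sketch of that update step, assuming lessons are appended as dated bullets under a trailing "Lessons Learned" section. The section name, the bullet format, and the `append_lesson` helper are illustrative assumptions, not necessarily how a real CLAUDE.md is laid out.

```python
from datetime import date
from pathlib import Path

LESSONS_HEADING = "## Lessons Learned"  # hypothetical section name


def append_lesson(context_path: Path, lesson: str, when: date) -> None:
    """Append a dated lesson bullet to the context file.

    Assumes the lessons section is the last section in the file,
    so appending at the end keeps the bullet under the right heading.
    """
    text = context_path.read_text(encoding="utf-8") if context_path.exists() else ""
    if LESSONS_HEADING not in text:
        # Create the section on first use, separated from earlier content.
        prefix = text.rstrip("\n")
        text = (prefix + "\n\n" if prefix else "") + LESSONS_HEADING + "\n"
    if not text.endswith("\n"):
        text += "\n"
    text += f"- {when.isoformat()}: {lesson}\n"
    context_path.write_text(text, encoding="utf-8")
```

Committing the file after each call keeps the lesson in version control, so the history shows when each rule was added and why.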

2. Agent Configuration Evolution

I have specialized agents in .claude/agents/ - each with specific expertise and constraints. After the breaking incident, I revised the agent configs to be more explicit about preservation vs. deletion and about when to ask vs. act.

The configs themselves become part of the feedback loop. They evolve based on what works and what breaks.

3. Automated Health Checks as Validation

I have scheduled tasks that run health checks daily and generate reports. These are my “CI/CD pipeline” for AI-generated work - they catch problems before I do.

This is validation automation. The same principle as CI/CD, applied to autonomous agent outputs.
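As a rough sketch of what such a health check runner could look like: each check is a plain function that returns a detail string or raises on failure, and the results are rendered as a markdown report with failures first. The names `CheckResult`, `run_health_checks`, and `render_report`, and the report layout, are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def run_health_checks(checks: dict[str, Callable[[], str]]) -> list[CheckResult]:
    """Run each check; a check passes if it returns without raising."""
    results = []
    for name, check in checks.items():
        try:
            results.append(CheckResult(name, True, check()))
        except Exception as exc:  # one failing check must not stop the others
            results.append(CheckResult(name, False, str(exc)))
    return results


def render_report(results: list[CheckResult]) -> str:
    """Render results as a short markdown report, failures first."""
    ordered = sorted(results, key=lambda r: r.passed)  # False sorts before True
    lines = ["# Health check report", ""]
    for r in ordered:
        status = "PASS" if r.passed else "FAIL"
        lines.append(f"- [{status}] {r.name}: {r.detail}")
    return "\n".join(lines) + "\n"
```

Putting failures at the top matters when a human reads the report daily: the first lines are the ones that need attention.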

4. The Todo List as Execution Trace

Every complex task gets broken into todos. When something breaks, I can trace exactly what the agent thought it was doing vs. what it actually did. That gap is the feedback.

This is execution observability. Making agent decision-making visible and traceable.
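One way to make that gap concrete is to compare the planned todo list against a log of what was actually executed and report the first step where they differ. This is a sketch under the assumption that both are available as ordered lists of step descriptions; `first_divergence` is a hypothetical helper.

```python
from itertools import zip_longest
from typing import Optional


def first_divergence(
    planned: list[str], executed: list[str]
) -> Optional[tuple[int, Optional[str], Optional[str]]]:
    """Return (index, planned_step, executed_step) for the first mismatch.

    Returns None when the two lists match exactly. A missing step on
    either side shows up as None in the corresponding position.
    """
    for i, (plan, actual) in enumerate(zip_longest(planned, executed)):
        if plan != actual:
            return i, plan, actual
    return None
```

For example, with illustrative step names, a plan of `["rebase onto main", "squash fixup commits", "run tests"]` against an execution log of `["rebase onto main", "cherry-pick selected commits", "run tests"]` points at index 1, which is where the review should start.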

This is what CircleCI means by “evolve the entire delivery system iteratively rather than optimizing components in isolation.”

From Execution to Judgment

CircleCI emphasizes that expertise is shifting from execution to judgment - from writing code to judging what AI produces and architecting the guardrails.

This is what I wrote about in “Why Experienced Architects Will Orchestrate AI Agent Teams” - but CircleCI grounds it in SDLC realities. They point out:

- Traditional code review assumes small, focused changesets

- AI generates massive changesets that are syntactically correct

- Human review can’t keep up

- The solution is redesigning reviews for automation and risk-based triage

I’m doing this with my agents:

- High-risk operations (git operations, publishing) require explicit approval

- Low-risk operations (drafting, research) run autonomously

- Context markdown defines the judgment criteria agents should apply

- When agents violate those criteria, I refine the context

This is agent orchestration with built-in feedback loops.
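The triage rule itself is small enough to sketch. The categories below come from the list above; the `RISK_BY_CATEGORY` mapping, the `may_proceed` function, and the choice to treat unknown categories as high risk are assumptions for illustration.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical mapping from operation category to risk level.
RISK_BY_CATEGORY = {
    "git": Risk.HIGH,
    "publishing": Risk.HIGH,
    "drafting": Risk.LOW,
    "research": Risk.LOW,
}


def may_proceed(category: str, approved: bool = False) -> bool:
    """Low-risk work runs autonomously; everything else needs explicit approval."""
    risk = RISK_BY_CATEGORY.get(category, Risk.HIGH)  # unknown categories fail safe
    return risk is Risk.LOW or approved
```

Defaulting unknown categories to high risk means a new kind of operation is gated until someone deliberately classifies it as safe.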

The Real-World Test

Here’s where CircleCI’s theory meets my practice:

They say: “Infrastructure designed for predictable human batches cannot validate the constant stream of AI-generated code.”

I learned: My agent broke the app because it made 50 decisions in sequence without checkpoints. Each decision was locally rational. The combination was catastrophic.

They recommend: “Integrate feedback loops connecting pipeline data back to AI development tools.”

I implemented: After every failure, I document what went wrong in markdown (health-check reports, incident notes) and update agent context/configs. The next agent iteration reads those lessons.

They warn: “The transition challenges professional identity; teams must redefine expertise around judgment rather than execution.”

I’m experiencing: I spend more time curating context, designing agent workflows, and evaluating outputs than I do actually writing code or content. And that’s exactly right.

What AI Agent Monitoring Actually Looks Like

Let me be concrete. Here’s my current feedback loop system:

Daily Cycle

- Scheduled health checks run (automation-health-specialist agent)

- Generate reports in reports/ directory

- I read reports, identify issues

- Update context markdown or agent configs based on findings

- Next day’s agents read updated context

Per-Task Cycle

- Break task into todos (forced decomposition)

- Agent works through todos sequentially

- If failure occurs, todo list shows exactly where

- I analyze the gap between intent and execution

- Refine context/configs to prevent repeat
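The per-task cycle can be sketched as a loop that runs todos in order and validates after each one, so a long run of locally rational decisions cannot continue past the first broken state. The `Todo` and `RunResult` types and the `checkpoint` callback (for example, "do the tests still pass?") are hypothetical names for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Todo:
    description: str
    action: Callable[[], None]
    status: str = "pending"  # pending -> done | failed


@dataclass
class RunResult:
    completed: list[str] = field(default_factory=list)
    failed_at: Optional[str] = None
    error: Optional[str] = None


def run_todos(todos: list[Todo], checkpoint: Callable[[], bool]) -> RunResult:
    """Run todos in order; stop at the first exception or failed checkpoint."""
    result = RunResult()
    for todo in todos:
        try:
            todo.action()
            if not checkpoint():
                raise RuntimeError("checkpoint failed")
        except Exception as exc:
            todo.status = "failed"
            result.failed_at, result.error = todo.description, str(exc)
            break  # later todos stay "pending", showing exactly where work stopped
        todo.status = "done"
        result.completed.append(todo.description)
    return result
```

After a failed run, the statuses read done, failed, pending in order, which is the trace to compare against the original intent.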

Weekly Cycle

- Weekly planning agent reviews all health checks, analytics, GitHub issues

- Generates plan based on strategic context

- I review plan, adjust priorities

- Update CLAUDE.md with refined priorities

- All agents read updated priorities

This is exactly what CircleCI describes: “many activities now unfold simultaneously, and feedback flows in multiple directions.”

The Infrastructure You Need

CircleCI focuses on CI/CD infrastructure. For AI agent orchestration, you need:

- Context as Code - CLAUDE.md, agent configs in .claude/agents/, all version controlled

- Execution Observability - Todo lists, health check reports, execution traces

- Automated Validation - Scheduled tasks that verify AI-generated work

- Human Checkpoints - Explicit approval requirements for high-risk operations

- Feedback Documentation - Every failure gets documented and fed back into context

I’m using:

- Claude Code with MCP servers (scheduler, analytics, etc.)

- Markdown files for all context and documentation

- GitHub for version control and cross-team communication

- Automated health checks via scheduler MCP

- Manual approval gates for publishing and git operations

Where This Gets Powerful

CircleCI’s thesis is that AI transforms SDLC from sequential to simultaneous. I’m seeing this with my marketing automation:

- Content-publishing-specialist validates blog posts

- SEO-specialist optimizes for search

- Customer-discovery-specialist analyzes feedback

- Weekly-planning-specialist generates plans

- Automation-health-specialist monitors systems

All these agents run in parallel, feed findings back into shared context, and inform each other’s work. It’s not a pipeline - it’s a mesh.
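A toy model of that mesh, assuming each agent is a function that reads a snapshot of the shared context and returns one finding. The `run_round` function and the string-valued context are simplifications for illustration; real agents would be separate Claude Code invocations reading markdown files.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# An agent reads a context snapshot and returns a finding (hypothetical shape).
Agent = Callable[[dict[str, str]], str]


def run_round(agents: dict[str, Agent], shared_context: dict[str, str]) -> dict[str, str]:
    """Run all agents in parallel on the same snapshot, then merge their findings."""
    snapshot = dict(shared_context)  # agents read a copy, so writes cannot race
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(agent, snapshot) for name, agent in agents.items()}
        findings = {name: future.result() for name, future in futures.items()}
    # Merge only after every agent has finished; the next round sees all findings.
    return {**shared_context, **findings}
```

Calling `run_round` repeatedly, feeding each result back in as the next `shared_context`, is what lets one agent's finding show up in another agent's input on the following round.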

And when one breaks something (like my feature branch incident), the lesson goes into the shared context, so every other agent picks it up the next time it runs.

The Practical Takeaway

If you’re orchestrating AI agents (for development, marketing, operations, whatever), you need the same feedback loop architecture that CircleCI describes for CI/CD:

- Make context explicit and version-controlled - Don’t keep it in your head

- Break work into observable steps - Todo lists, execution traces, whatever

- Automate validation - Don’t wait for failures to find problems

- Document every failure - And feed it back into agent context

- Iterate the system, not just the agents - Refine context, configs, workflows together

CircleCI is right: “The transition challenges professional identity.” I’m no longer writing blog posts or running analytics queries. I’m curating context that enables agents to do that work reliably.

And when they break things? That’s not a failure. That’s feedback. The loop closes, the context improves, and the next iteration is better.

This is what AI-native work looks like. CircleCI is building it for software delivery. I’m building it for marketing operations. The patterns are the same.

What’s Next

Want to see this in action? My entire marketing automation system is in the brandcast-marketing repo. CLAUDE.md, agent configs, health check reports - all there. It’s messy, it’s evolving, and it breaks sometimes.

But every break makes the system stronger. That’s the feedback loop working.