Back in the early days of the container explosion, I spent the better part of two years on the road across the US and fair chunks of the planet talking about how containers work. People were confused, and they were seeing a lot of misinformation about what containers could or couldn’t do. There was, I’m pretty sure, a start-up that was selling air fryers inside containers (air fryers were new then, too).

Confusion breeds a lack of confidence in a tool and that leads to adoption inertia. I hate inertia.

I’m seeing the same thing, only 1000 times worse, with agentic development systems. My back can’t survive 100 nights in hotel beds in 2026. So we’re going to do this round virtually.

I’m going to use Gemini CLI for two primary reasons:

- I work for Google Cloud and use it all day every day.

- It’s open source, so we can dig deep into the source code.

When you type ‘gemini’ in your terminal, what actually happens?

Let’s find out!

Starting Gemini CLI

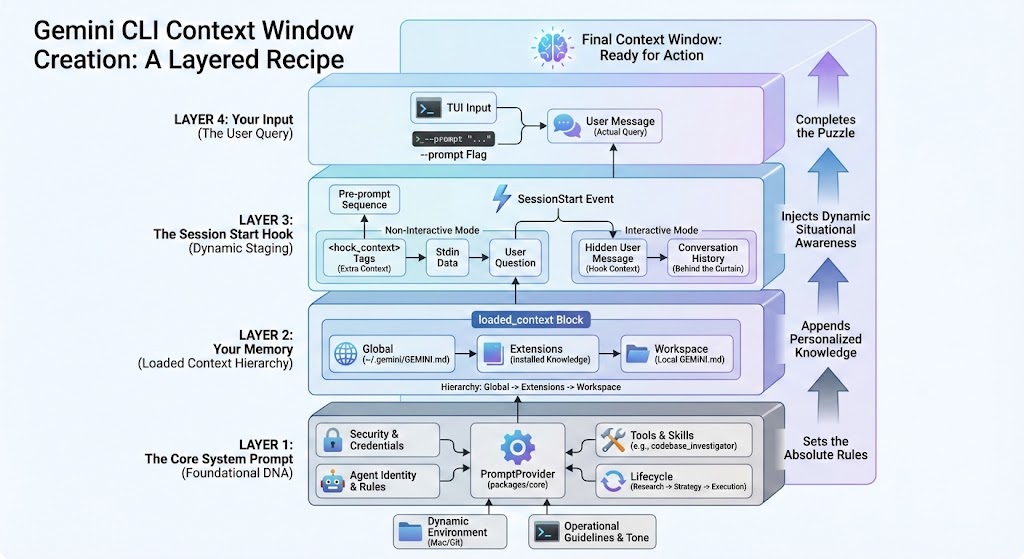

When you fire off a command, there isn’t just one generic string of text heading over to the LLM. It’s an assembly line. Information is gathered, prioritized, and stacked before it ever hits the model.

Here is exactly how that context is built, layer by layer.

Layer 1: The Core System Prompt

Think of this as the foundational DNA of the agent. This is where the PromptProvider (living in packages/core) takes the wheel. It sets the absolute rules of engagement.

First, it establishes the agent’s identity and whether it’s running interactively or autonomously. Then it lays down the unbendable mandates: hardcoded rules for security, protecting your credentials, and strict engineering standards. You can actually provide custom prompts to fine-tune your experience.

From there, it bolts on the tools: available sub-agents (like codebase_investigator) and active skills. It defines the primary lifecycle (“Research -> Strategy -> Execution”) and sets the operational guidelines for tone, style, and safety. If you happen to be on a Mac using Seatbelt, or sitting inside a Git repository, it dynamically injects that environment context, too.

This core prompt forms the agent’s entire worldview.
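To make the assembly concrete, here is a minimal sketch of how a prompt like this could be stitched together from sections. Everything in it is an assumption for illustration: the PromptEnvironment shape, the buildCoreSystemPrompt function, the section headings, and the mandate wording are all made up, and none of it is the real PromptProvider API.

```typescript
// Hypothetical shapes for illustration only; not the real PromptProvider API.
interface PromptEnvironment {
  interactive: boolean;
  inGitRepo: boolean;
  sandbox?: string; // e.g. "macOS Seatbelt"
  subAgents: string[];
  skills: string[];
}

function bulletList(items: string[]): string {
  return items.map((item) => `- ${item}`).join("\n");
}

function buildCoreSystemPrompt(env: PromptEnvironment): string {
  const sections: string[] = [];

  // 1. Identity and run mode.
  const mode = env.interactive ? "interactive" : "autonomous";
  sections.push(`# Identity\nYou are a CLI coding agent running in ${mode} mode.`);

  // 2. Fixed mandates (placeholder wording, not the real prompt text).
  sections.push(
    "# Core Mandates\n" +
      bulletList(["Never expose credentials.", "Follow the project's engineering standards."]),
  );

  // 3. Tools are listed only when some are available.
  if (env.subAgents.length > 0) {
    sections.push(`# Sub-agents\n${bulletList(env.subAgents)}`);
  }
  if (env.skills.length > 0) {
    sections.push(`# Skills\n${bulletList(env.skills)}`);
  }

  // 4. The primary lifecycle.
  sections.push("# Workflow\nResearch -> Strategy -> Execution");

  // 5. Environment context is injected only when it applies.
  if (env.sandbox !== undefined) {
    sections.push(`# Sandbox\nRunning inside ${env.sandbox}.`);
  }
  if (env.inGitRepo) {
    sections.push("# Git\nThe working directory is a Git repository.");
  }

  return sections.join("\n\n");
}

// Example: an interactive session on a Mac, inside a Git repository.
const exampleCorePrompt = buildCoreSystemPrompt({
  interactive: true,
  inGitRepo: true,
  sandbox: "macOS Seatbelt",
  subAgents: ["codebase_investigator"],
  skills: [],
});
```

The point of the sketch is the conditional sections: the same builder produces a different worldview depending on where and how the agent is running.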

Layer 2: Your Memory

Next up is your personal touch. The system appends the loaded_context block to the system prompt. But it doesn’t just jam it all in there; it respects a strict hierarchy.

It starts with the global context: your foundational preferences living in ~/.gemini/GEMINI.md. Then it layers in knowledge from any installed extensions. Finally, it pulls in your workspace-wide mandates from the local GEMINI.md file in your project root. Gemini CLI also has an experimental flag, experimental.jitContext, that trims this down by loading these files only as they’re encountered throughout a project. For large repos and monorepos, this can be a significant performance improvement.

Broader rules load first and specific project requirements load last, so the most specific context gets the final word. In order of precedence: Sub-directories > Workspace > Extensions > Global.
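The hierarchy above is easy to picture as a sort followed by a concatenation. This is a rough sketch under stated assumptions: the Scope type, the MemoryFile shape, the separator lines, and the exact tag format of the rendered block are all hypothetical, and the real loader may format things differently.

```typescript
// Hypothetical model of the memory hierarchy; names and formatting are illustrative.
type Scope = "global" | "extension" | "workspace" | "subdirectory";

interface MemoryFile {
  scope: Scope;
  path: string;
  content: string;
}

// Broad scopes load first, specific scopes load last.
const LOAD_ORDER: Scope[] = ["global", "extension", "workspace", "subdirectory"];

function orderMemory(files: MemoryFile[]): MemoryFile[] {
  // Copy before sorting so the caller's array is left untouched.
  return [...files].sort(
    (a, b) => LOAD_ORDER.indexOf(a.scope) - LOAD_ORDER.indexOf(b.scope),
  );
}

function renderLoadedContext(files: MemoryFile[]): string {
  const body = orderMemory(files)
    .map((file) => `--- ${file.scope}: ${file.path} ---\n${file.content.trim()}`)
    .join("\n\n");
  return `<loaded_context>\n${body}\n</loaded_context>`;
}

function appendMemory(systemPrompt: string, files: MemoryFile[]): string {
  // With no memory files, the system prompt is passed through unchanged.
  if (files.length === 0) {
    return systemPrompt;
  }
  return `${systemPrompt}\n\n${renderLoadedContext(files)}`;
}

// Example: the files arrive in arbitrary order and come out broad-to-specific.
const exampleMemoryPrompt = appendMemory("CORE PROMPT", [
  { scope: "workspace", path: "./GEMINI.md", content: "Use pnpm, not npm." },
  { scope: "global", path: "~/.gemini/GEMINI.md", content: "Prefer concise answers." },
]);
```

Putting the most specific file last means that when two instructions conflict, the project-level one is the last thing the model reads.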

Layer 3: The Session Start Hook

Before you even drop your first query, Gemini fires off a SessionStart event. This is where the system gets dynamic.

If you’re running in non-interactive mode and a hook returns extra context, the framework wraps it in <hook_context> tags and shoves it right in front of your actual prompt. The sequence looks like this: Hook Context -> Stdin Data -> User Question.
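Here is a small sketch of that non-interactive sequence. The function name, the parameter shape, and the blank-line separator are assumptions for illustration; only the hook_context tag and the ordering come from the description above.

```typescript
// Hypothetical helper; only the <hook_context> tag and the ordering are from the text.
interface NonInteractiveParts {
  hookContext?: string; // extra context returned by a SessionStart hook, if any
  stdin?: string;       // data piped in on stdin, if any
  question: string;     // the user's actual prompt
}

function buildNonInteractivePrompt(parts: NonInteractiveParts): string {
  const segments: string[] = [];

  // 1. Hook context goes first, wrapped in its tags.
  if (parts.hookContext) {
    segments.push(`<hook_context>${parts.hookContext}</hook_context>`);
  }

  // 2. Stdin data comes next.
  if (parts.stdin) {
    segments.push(parts.stdin);
  }

  // 3. The user's question always comes last.
  segments.push(parts.question);

  return segments.join("\n\n");
}

// Example: a hook reports the branch, a log is piped in, and the user asks a question.
const exampleNonInteractive = buildNonInteractivePrompt({
  hookContext: "Current branch: main",
  stdin: "ERROR: connection refused",
  question: "Why is this failing?",
});
```

Missing pieces simply drop out, so a run with no hook and no piped input sends the question on its own.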

If you’re in interactive mode, that hook context gets slipped into the conversation history as a hidden user message before your first prompt is ever submitted. It’s setting the stage behind the curtain.
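The interactive case can be sketched as seeding the history before the first turn. The HistoryEntry shape and the hiddenFromUi flag are hypothetical names for illustration, and whether the real implementation wraps the hook text in tags here is not something this sketch assumes.

```typescript
// Hypothetical history model; the real conversation types will differ.
interface HistoryEntry {
  role: "user" | "model";
  text: string;
  hiddenFromUi: boolean; // sent to the model, but not rendered in the TUI
}

function seedHistory(hookContext?: string): HistoryEntry[] {
  // No hook output means the session starts with an empty history.
  if (hookContext === undefined || hookContext.trim() === "") {
    return [];
  }
  return [{ role: "user", text: hookContext, hiddenFromUi: true }];
}

function submitPrompt(history: HistoryEntry[], prompt: string): HistoryEntry[] {
  // Return a new array so earlier snapshots of the history stay valid.
  return [...history, { role: "user", text: prompt, hiddenFromUi: false }];
}

function visibleTranscript(history: HistoryEntry[]): string[] {
  return history.filter((entry) => !entry.hiddenFromUi).map((entry) => entry.text);
}

// Example: the model sees two user messages, the person at the keyboard sees one.
const exampleHistory = submitPrompt(
  seedHistory("Open tickets: 3"),
  "What should I work on first?",
);
```

The model receives both entries in order, while the transcript on screen shows only the prompt you typed.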

Layer 4: Your Input

Finally, we get to you. The actual query you typed into the TUI or passed via the --prompt flag. This final piece of the puzzle is sent across as the actual “User” message.
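Putting the four layers together, the final request can be pictured roughly like this. The ModelRequest shape and the assembleRequest function are hypothetical and are not the actual wire format; the sketch only shows which layer ends up where.

```typescript
// Hypothetical request shape for illustration; not the actual API payload.
interface ChatMessage {
  role: "user" | "model";
  text: string;
}

interface ModelRequest {
  systemInstruction: string; // Layer 1 (core prompt) + Layer 2 (memory)
  messages: ChatMessage[];   // Layer 3 (seeded history) + Layer 4 (your input)
}

interface RequestLayers {
  corePrompt: string;
  memoryBlock?: string;
  history: ChatMessage[];
  userInput: string;
}

function assembleRequest(layers: RequestLayers): ModelRequest {
  // Memory is appended to the core prompt when it is present.
  const systemParts: string[] = [layers.corePrompt];
  if (layers.memoryBlock) {
    systemParts.push(layers.memoryBlock);
  }

  return {
    systemInstruction: systemParts.join("\n\n"),
    // Your query is always the last "user" message in the list.
    messages: [...layers.history, { role: "user", text: layers.userInput }],
  };
}

// Example: all four layers present.
const exampleRequest = assembleRequest({
  corePrompt: "CORE PROMPT",
  memoryBlock: "MEMORY BLOCK",
  history: [{ role: "user", text: "hook context" }],
  userInput: "Refactor the auth module.",
});
```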

And that’s it. That is the exact recipe of context that gives the agent enough situational awareness to actually be useful, rather than just another chatbot hallucinating about air fryers inside of containers.

Why this matters

Is any of this going to stop you from using Gemini CLI? No. Is it going to stop you from using Copilot? No. Is it going to stop you from using Cursor? No. Is it going to stop you from using Claude? No.

But it will help you understand how these systems work and how to get the most out of them. It will help you understand why they do what they do and how to get them to do what you want them to do.

Most importantly, it helps you understand that the agentic systems we’re building today are not magic. They are just software.

In the next session, we’ll take a look at how subagents work to parallelize the work of the agent and keep your brand new context window clean.